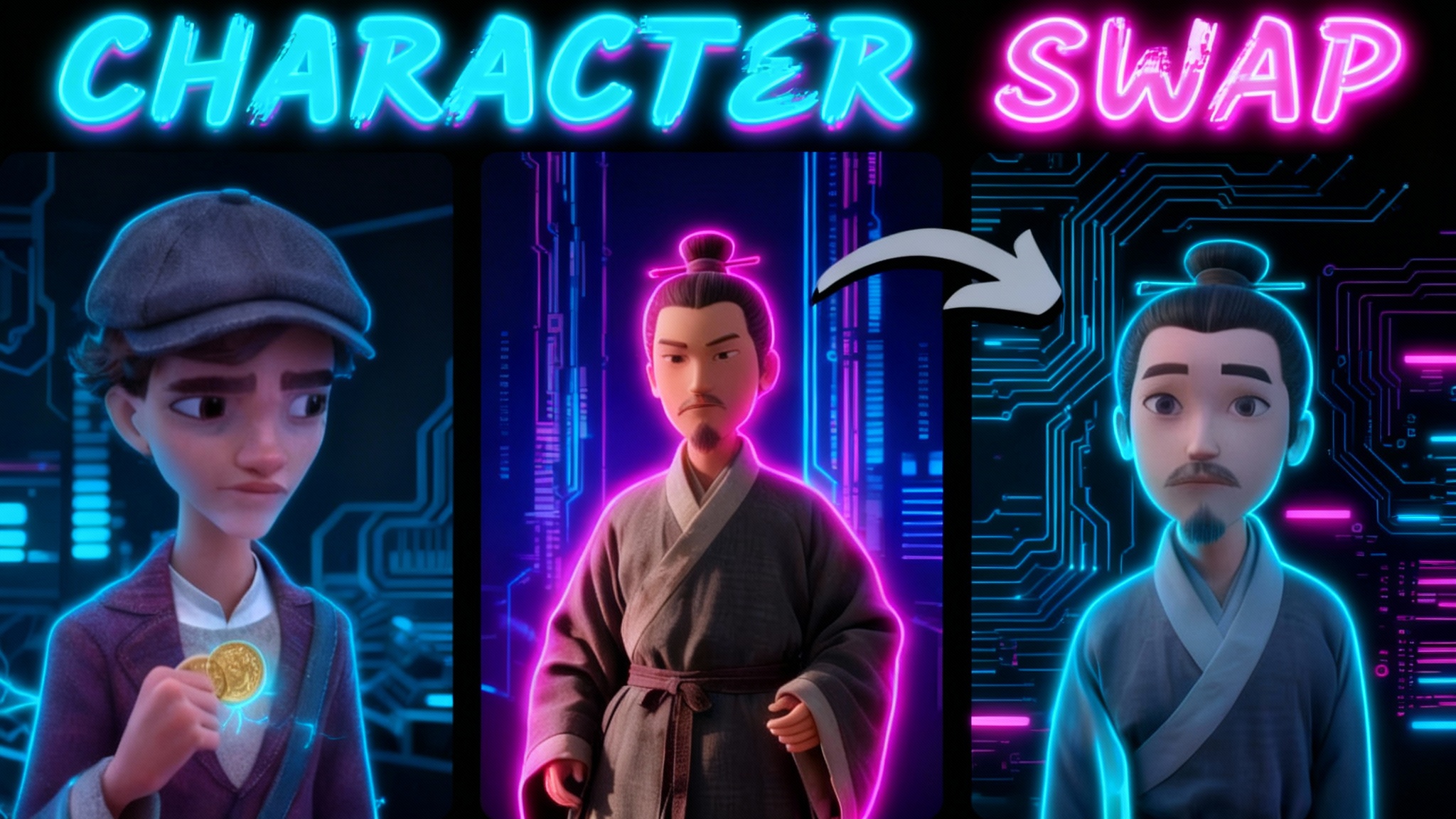

Wan2.2 Animate in ComfyUI: Long Videos & Character Swap

This guide walks through my complete setup and workflow for generating longer videos with character replacement using Wan 2.2 Animate in ComfyUI. I cover installation, missing nodes, model placement, GPU-specific model choices, mask setup, performance tuning, error handling (Triton and Sage Attention), and practical settings for stable output. I keep everything in the order I did it, so you can follow along without skipping steps.

Setup: ComfyUI Portable, Manager, and Update

- I downloaded and installed ComfyUI Portable.

- I installed the ComfyUI Manager to handle nodes and updates.

- I ran the provided update batch file to ensure the environment was current.

- I launched ComfyUI and opened the workflow from the Wan video repository.

When I pasted the workflow into ComfyUI, many nodes were missing. I closed the workflow and installed the missing nodes one by one from ComfyUI Manager's missing-node list. After a restart, the missing-node highlights were gone.

Models and Files Placement

I kept my existing models folder and placed it into the new ComfyUI installation. The workflow expects specific files in specific folders:

- FP8 models go in the diffusion models folder (ComfyUI/models/diffusion_models).

- Smaller GGUF models go in the U-Net folder (ComfyUI/models/unet).

- Links to the required models are included in the workflow notes.

I saw guidance that:

- FP8 models are intended for RTX 4000 series and newer.

- The “E5” model variant is intended for RTX 3000 series and older.

These diffusion models are about 18 GB, which is more than many GPUs can hold in VRAM. Smaller GGUF builds are available and go in the U-Net folder. I added links for these GGUF files in the workflow notes.

I selected the primary model in the model selector and had both an FP8 model and a Q4 GGUF model available. I started with the GGUF model.
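To see which side of the RTX 4000 line your GPU falls on, you can query its CUDA compute capability. The helper below is a hypothetical sketch, not part of the workflow. It assumes "RTX 4000 and newer" corresponds to compute capability 8.9 (Ada) or higher, while RTX 3000 (Ampere) cards report 8.6. GGUF builds remain an option on either generation when VRAM is the limit.

```python
# Hypothetical helper: suggest the FP8 or E5 build from the GPU's CUDA
# compute capability. Assumes "RTX 4000 and newer" means capability 8.9
# (Ada) or higher; RTX 3000 (Ampere) cards report 8.6.
ADA = (8, 9)


def suggest_variant(capability: tuple[int, int]) -> str:
    """Return "FP8" for Ada-or-newer GPUs, otherwise "E5"."""
    # Tuples compare element by element: (12, 0) > (8, 9) > (8, 6).
    return "FP8" if capability >= ADA else "E5"


if __name__ == "__main__":
    try:
        import torch  # present inside ComfyUI's embedded Python
    except ImportError:
        print("torch not found; run this with ComfyUI's embedded python.exe")
    else:
        if torch.cuda.is_available():
            cap = torch.cuda.get_device_capability(0)
            print(torch.cuda.get_device_name(0), cap, "->", suggest_variant(cap))
        else:
            print("No CUDA GPU detected")
```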

VAE, CLIP Vision, Loras, and Text Encoder

Below the model, the VAE needs to be set:

- The FP32 VAE is large, but works.

- You can also use the older Wan 2.1 VAE as a fallback if FP32 fails.

- I included a direct download link in the workflow notes so you don’t need to search.

Next:

- Add the CLIP Vision file. I linked it in the notes.

- The workflow uses two Loras. Both links are already provided.

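- If you have more VRAM, consider disabling the “block swap” node or reducing “blocks to swap.” Block swap keeps some of the model's transformer blocks in system RAM and moves them onto the GPU when each step needs them. The more blocks you swap, the less VRAM is used and the slower generation becomes.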

- The text encoder link is included in the notes. The large file can be replaced with a smaller FP8 variant found lower in the list. I used the smaller one.

I left the remaining settings at their defaults.
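To get a feel for the block-swap trade-off, here is a back-of-the-envelope sketch. It makes hypothetical simplifications: the weights are split evenly across 40 transformer blocks (an assumed count for the 14B model), and activations, the VAE and the text encoder are ignored. Treat the numbers as rough.

```python
def resident_weights_gb(model_gb: float, total_blocks: int, blocks_to_swap: int) -> float:
    """Approximate GB of weights that stay on the GPU with block swap on.

    Hypothetical model: weights are split evenly across transformer blocks;
    swapped blocks live in system RAM and are copied in when needed.
    """
    if not 0 <= blocks_to_swap <= total_blocks:
        raise ValueError("blocks_to_swap must be between 0 and total_blocks")
    return model_gb * (total_blocks - blocks_to_swap) / total_blocks


if __name__ == "__main__":
    MODEL_GB = 18      # approximate file size mentioned above
    TOTAL_BLOCKS = 40  # assumed block count for the 14B model
    for swap in (0, 10, 20, 30):
        gb = resident_weights_gb(MODEL_GB, TOTAL_BLOCKS, swap)
        print(f"blocks_to_swap={swap:2d}: ~{gb:.1f} GB of weights on the GPU")
```

Every swapped block has to be copied in again on each step. That is why raising the count saves VRAM but costs time.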

Preparing Inputs: Character and Source Video

- I uploaded the image of the character I want in motion.

- The video frames are analyzed for character swapping in a dedicated section of the workflow.

- I uploaded a video with 109 frames.

Points placed on the frame mark coordinates that localize the character. The workflow shows a mask preview for the swap area:

- The black region is the area that will be swapped.

- Green circles indicate areas to include in the mask.

- Red circles indicate areas to exclude.

I bypassed the generation nodes below to preview the mask without starting the full video generation. A segmentation file is downloaded automatically if not already present. In my case, it was already in the models folder, so no download was triggered.

To adjust the mask:

- Hold Shift and left-click to add a green (include) point, or hold Shift and right-click to add a red (exclude) point.

- Use the clear button to remove existing pointers.

- Run the preview to verify the mask is correct.
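Conceptually, the green and red points become a point prompt for the segmentation model. The sketch below assumes the common SAM-style convention: label 1 means include and label 0 means exclude. The workflow's points editor node may store points differently, and the click coordinates are hypothetical.

```python
# Hypothetical sketch: turn include/exclude clicks into a SAM-style point
# prompt (label 1 = include/foreground, label 0 = exclude/background).
Point = tuple[int, int]  # (x, y) in pixels of the preview frame


def build_point_prompt(include: list[Point], exclude: list[Point]) -> tuple[list[list[int]], list[int]]:
    """Return (coords, labels) with include points first, then exclude points."""
    coords = [list(p) for p in include] + [list(p) for p in exclude]
    labels = [1] * len(include) + [0] * len(exclude)
    return coords, labels


if __name__ == "__main__":
    green = [(224, 300), (230, 520)]  # hypothetical clicks on the subject
    red = [(60, 400)]                 # hypothetical click on the background
    print(build_point_prompt(green, red))
```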

The workflow also identifies the face from the input video for lip movement and expression matching.

First Run and Initial Errors

I activated the generation nodes and ran the workflow. I initially forgot to provide the swap image, so I added it and ran again.

First error:

- Missing “Sage Attention.” I switched the attention type to SDPA (PyTorch's built-in scaled dot-product attention) to continue.
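Before switching back from SDPA later, you can check from ComfyUI's embedded Python whether the packages are importable at all. This hypothetical helper only checks importability: SageAttention imports as the sageattention module and Triton as triton. It cannot tell whether kernels will actually compile, and the returned strings are illustrative labels rather than guaranteed dropdown values.

```python
import importlib.util


def is_importable(module: str) -> bool:
    """True if the top-level module can be found on the current sys.path."""
    return importlib.util.find_spec(module) is not None


def pick_attention(available=is_importable) -> str:
    """Prefer Sage Attention when it and Triton are importable, else SDPA."""
    if available("sageattention") and available("triton"):
        return "sageattn"
    return "sdpa"


if __name__ == "__main__":
    print("attention backend to try:", pick_attention())
```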

Frame windows:

- The 109 frames were divided into windows based on a setting of 77.

- I encountered an error: “Cannot find Triton.”

Notes on frame windows:

- The value (77) represents the number of frames per window.

- Increasing beyond ~80 can reduce quality.

- The program attempted to process 77 frames when the Triton error occurred.
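The window sizes that come up in this guide (77, 81 and 85) all have the form 4n + 1. Wan's VAE compresses time by a factor of 4 while keeping the first frame, so Wan models generate frame counts of that form, with 81 as the usual default. The hypothetical helper below snaps a requested window down to the nearest such count.

```python
def is_valid_window(frames: int) -> bool:
    """True for frame counts of the form 4n + 1 (1, 5, ..., 77, 81, 85, ...)."""
    return frames >= 1 and (frames - 1) % 4 == 0


def snap_down(frames: int) -> int:
    """Largest 4n + 1 value that does not exceed `frames`."""
    if frames < 1:
        raise ValueError("frames must be >= 1")
    return frames - (frames - 1) % 4


def latent_frames(frames: int) -> int:
    """Latent frames after 4x temporal compression (first frame kept as-is)."""
    if not is_valid_window(frames):
        raise ValueError(f"{frames} is not of the form 4n + 1")
    return (frames - 1) // 4 + 1


if __name__ == "__main__":
    for requested in (77, 80, 81, 85):
        w = snap_down(requested)
        print(requested, "->", w, "frames,", latent_frames(w), "latent frames")
```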

Fixing Triton

I installed Triton following the repository instructions. Here’s what I did:

- Install the required Microsoft Visual C++ redistributable (as listed in the repo). Double-click the installer to run it.

- Open the Python environment inside ComfyUI Portable:

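- Go to the “python_embeded” folder in your ComfyUI Portable directory (the portable build spells it with one “d”).

- Open a command prompt in that folder. Call pip through the embedded .\python.exe so packages install into ComfyUI's Python rather than into a system-wide one.

- Remove any existing Triton:

.\python.exe -m pip uninstall -y triton

- Check your Torch version:

.\python.exe -m pip list | findstr torch

- The guide specified that PyTorch 2.8 pairs with Triton 3.4.

- On Windows, Triton is installed from the triton-windows package. Install a version lower than 3.5:

.\python.exe -m pip install -U "triton-windows<3.5"

If you need a specific version for your PyTorch, pin it directly (for example, 3.4 for PyTorch 2.8):

.\python.exe -m pip install "triton-windows==3.4.*"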

I saved the workflow and restarted ComfyUI.
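Because the Triton build has to match PyTorch, you can script the pairing. This sketch assumes the Windows build comes from the triton-windows package. The table is filled in from upstream PyTorch's pinned Triton releases, and only the 2.8 to 3.4 pair comes from the guide, so verify the other rows against the Triton repository before relying on them.

```python
# Assumed PyTorch (major, minor) -> Triton minor release pairs; only
# (2, 8) -> "3.4" is confirmed by the guide above.
TRITON_FOR_TORCH = {
    (2, 6): "3.2",
    (2, 7): "3.3",
    (2, 8): "3.4",
    (2, 9): "3.5",
}


def triton_requirement(torch_version: str, package: str = "triton-windows") -> str:
    """Map a version string such as '2.8.0+cu128' to 'triton-windows==3.4.*'."""
    # Drop the local build suffix (+cu128), then keep major.minor.
    major, minor = (int(part) for part in torch_version.split("+")[0].split(".")[:2])
    try:
        triton = TRITON_FOR_TORCH[(major, minor)]
    except KeyError:
        raise ValueError(f"no Triton pairing recorded for torch {torch_version}") from None
    return f"{package}=={triton}.*"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("Run this with ComfyUI's embedded python.exe so torch can be found")
    else:
        print(f'.\\python.exe -m pip install "{triton_requirement(torch.__version__)}"')
```

The `==3.4.*` form is used instead of `==3.4.0` because an exact pin does not match post-releases such as 3.4.0.post1.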

Performance Notes: Resolution, Steps, and Windows

After restarting:

- I queued a run with windows of 77 frames.

- One step took about 61 seconds at ~800 px resolution on a 16 GB RTX 4060 Ti, which was slow.

I canceled the generation, but it didn't stop cleanly. I force-terminated the process, relaunched ComfyUI, and lowered the resolution while keeping the aspect ratio. At the lower resolution, each step took roughly 18 seconds. You can upscale the final output afterward, or raise the resolution again once your settings are dialed in.
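To lower the resolution without distorting the frame, scale both sides by the same factor. This sketch assumes dimensions should be multiples of 16, a common constraint in Wan workflows (8x VAE downsampling times a 2x2 patch). Check what your resize node actually enforces. The 1080x1920 source size is hypothetical.

```python
def scale_to_long_side(width: int, height: int, long_side: int, multiple: int = 16) -> tuple[int, int]:
    """Scale (width, height) so the longer side is about `long_side` pixels.

    Both sides are rounded to the nearest multiple of `multiple` (assumed 16
    here), never below one multiple, so the aspect ratio is kept closely.
    """
    scale = long_side / max(width, height)

    def snap(value: float) -> int:
        return max(multiple, round(value / multiple) * multiple)

    return snap(width * scale), snap(height * scale)


if __name__ == "__main__":
    # Hypothetical 1080x1920 portrait source at two target sizes.
    for target in (800, 512):
        w, h = scale_to_long_side(1080, 1920, target)
        print(f"long side {target}: {w}x{h}, {w * h:,} pixels per frame")
```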

I noticed that the second window processed a full 77 frames again instead of only the 32 remaining frames (109 − 77). Two 77-frame windows cover 154 frames, so about 45 frames run past the end of my 109-frame source. Those extra frames can produce sections without audio and odd motion. To avoid this, divide your total frame count into whole windows that match your window setting.
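You can check the arithmetic behind those extra frames directly. This sketch assumes non-overlapping windows that always generate a full window, which matches what I saw. The wrapper's actual windowing may overlap frames.

```python
import math


def window_plan(total_frames: int, window: int) -> tuple[int, int]:
    """Return (number_of_windows, frames_generated_beyond_the_source).

    Assumes windows do not overlap and the last window is not shortened.
    """
    if total_frames < 1 or window < 1:
        raise ValueError("frame counts must be positive")
    windows = math.ceil(total_frames / window)
    return windows, windows * window - total_frames


if __name__ == "__main__":
    print(window_plan(109, 77))  # the 109-frame clip with 77-frame windows
    print(window_plan(81, 81))   # trimmed to exactly one window
```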

That run took about 260 seconds. Using Sage Attention should speed things up.

Mask Shift After Resolution Changes

The swapped section no longer aligned with the intended subject: the mask area had shifted to the right, and the green/yellow points no longer sat on the subject. This happened because I changed the resolution, which made the video preview box smaller.

To tighten the mask, I adjusted the “block mask” value:

- I set it to 32, which made the mask boxes smaller and more precise.

With that, the workflow ran correctly but produced a few extra frames.
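Suppose the points are stored as pixel coordinates of the frame they were placed on (an assumption about the points editor). Then shrinking the frame leaves them on the wrong spot unless they are rescaled. This hypothetical helper shows the proportional rescaling. In practice, re-checking the points in the editor after a resolution change is the simpler fix.

```python
Point = tuple[int, int]


def rescale_points(points: list[Point], old_size: tuple[int, int], new_size: tuple[int, int]) -> list[Point]:
    """Map (x, y) pixel points from old_size (w, h) to new_size (w, h)."""
    sx = new_size[0] / old_size[0]
    sy = new_size[1] / old_size[1]
    return [(round(x * sx), round(y * sy)) for x, y in points]


if __name__ == "__main__":
    placed = [(224, 300), (230, 520)]  # hypothetical points on a 448x800 frame
    print(rescale_points(placed, (448, 800), (288, 512)))
```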

Controlling Frame Windows and Extra Frames

To fix the extra frames, I adjusted the window and frame settings above:

- Wan 2.2 video generation works well with a window of about 80 frames.

- I skipped the first 28 frames and set the window value to 81. The remaining 81 frames (109 − 28) then fit in a single window, and no second window was created.

- I queued the run again.

The result was consistent. Lighting on the character stayed the same with the smaller model; larger models can affect lighting more noticeably. That run took about 150 seconds. The window reported 85 frames in the log, and with these settings I can handle longer outputs with multiple 85-frame windows.
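You can compute the skip count rather than guess it. This hypothetical helper picks the largest window of the form 4n + 1 (like 77, 81 and 85) that fits both the clip and a maximum window size. It then skips the leftover frames at the start of the clip, which reproduces the 109-frame case above.

```python
def single_window_plan(total_frames: int, max_window: int = 81) -> tuple[int, int]:
    """Return (frames_to_skip, window) so that exactly `window` frames remain."""
    limit = min(total_frames, max_window)
    if limit < 1:
        raise ValueError("need at least one frame")
    window = limit - (limit - 1) % 4  # round down to the 4n + 1 form
    return total_frames - window, window


if __name__ == "__main__":
    skip, window = single_window_plan(109)
    print(f"skip the first {skip} frames, window size {window}")
```

For longer clips, the same idea applies per window: trim the clip so it divides into whole windows instead of leaving a partial one at the end.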

Installing Sage Attention

Sage Attention offers speed gains. Here is how I installed it:

- Find the correct release for your environment.

- In the embedded Python folder, check your Torch, CUDA, and Python versions:

.\python.exe -c "import torch, sys; print('torch', torch.__version__, 'cuda', torch.version.cuda, 'py', sys.version)"

- The CP tag in a wheel name encodes the Python version: cp39 is Python 3.9 and cp313 is Python 3.13. If the wheel's ABI tag is abi3, it targets the stable ABI and also works on newer Python versions.

- Match the Torch version; CUDA tags may differ across builds.

- Download the appropriate wheel (.whl) and save it into the embedded Python folder.

- Install the wheel locally:

.\python.exe -m pip install .\SageAttention-<matching>-cp39-cp39-win_amd64.whl

The installer reported success.
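Wheel file names follow the pattern name-version[-build]-python-abi-platform.whl, so you can check a download before installing it. This is a simplified, stdlib-only sketch; pip's own tag matching handles more cases. It treats a cp39 wheel with an abi3 ABI tag as usable on Python 3.9 and newer, and a cp39-cp39 wheel as Python 3.9 only.

```python
import sys


def wheel_tags(filename: str) -> tuple[str, str, str]:
    """Return the (python, abi, platform) tags from a wheel file name."""
    if not filename.endswith(".whl"):
        raise ValueError("not a .whl file")
    parts = filename[: -len(".whl")].split("-")
    # name-version[-build]-python-abi-platform: 5 or 6 dash-separated parts.
    if len(parts) not in (5, 6):
        raise ValueError("unexpected wheel file name")
    return parts[-3], parts[-2], parts[-1]


def fits(filename: str, py: tuple[int, int] = sys.version_info[:2], platform: str = "win_amd64") -> bool:
    """Simplified check that a CPython wheel can be installed on `py`."""
    python_tag, abi_tag, platform_tag = wheel_tags(filename)
    if platform_tag != platform or not python_tag.startswith("cp"):
        return False
    if abi_tag == "abi3":
        # Stable-ABI wheel: works on the tagged version and anything newer.
        digits = python_tag[2:]
        built_for = (int(digits[0]), int(digits[1:]))
        return tuple(py) >= built_for
    # Version-specific wheel: python and ABI tags must both match exactly.
    wanted = f"cp{py[0]}{py[1]}"
    return python_tag == wanted and abi_tag == wanted


if __name__ == "__main__":
    # Hypothetical file name; pass the wheel you downloaded as an argument.
    name = sys.argv[1] if len(sys.argv) > 1 else "example-1.0-cp39-abi3-win_amd64.whl"
    print(name, "fits this interpreter:", fits(name))
```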

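I realized I had missed one step mentioned for Triton:

- A ZIP package for Python 3.13 was listed. As I understand it, the ZIP supplies the C headers and libraries (the include and libs folders) that the embedded Python lacks and that Triton needs to compile kernels. Pick the ZIP that matches your Python version. I copied its contents into the embedded Python folder as instructed.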

I restarted ComfyUI, selected Sage Attention, and queued the workflow. One step took about 14 seconds, but the overall time was similar on the first run. The second run may be faster due to caching.

In my case, the ZIP content didn’t seem to affect the result directly, but I followed the instruction and kept it in place.
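If you want to confirm that step took effect, a quick check helps. My understanding is that the ZIP provides an include folder with the CPython headers and a libs folder with the Windows import library. This hypothetical check looks for the standard CPython file names in those folders.

```python
import sys
from pathlib import Path


def missing_build_files(python_dir: Path, version: tuple[int, int] = sys.version_info[:2]) -> list[str]:
    """Return the expected header/library files that are not present."""
    major, minor = version
    expected = [
        Path("include") / "Python.h",                # CPython C API header
        Path("libs") / f"python{major}{minor}.lib",  # Windows import library
    ]
    return [p.as_posix() for p in expected if not (python_dir / p).is_file()]


if __name__ == "__main__":
    # Run with ComfyUI's embedded python.exe so sys.prefix points at its folder.
    missing = missing_build_files(Path(sys.prefix))
    print("headers and libs present" if not missing else f"missing: {missing}")
```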

Testing Larger Variants and GGUF Modes

I selected an FP8 model larger than my GPU memory and paired it with a BF16 VAE. Generation took about 170 seconds, and this setup also worked for longer sequences.

New GGUF quantizations appeared over time; for example, a Q4_K_S build was uploaded during my session. You can switch between them in the model selector. Keep in mind:

- GGUF files belong in the U-Net folder.

- Diffusion models (FP8 or E5) belong in the diffusion models folder.

Output Tip: Single Video Assembly

To get a single combined video as the result, connect the image directly to the designated output path in the workflow as indicated. This consolidates outputs into one video file.

Table Overview: Models, Files, Folders, and Notes

| Component | Recommended Variant | Folder Location | Notes |
|---|---|---|---|
| Diffusion Model | FP8 (RTX 4000 and newer), E5 (RTX 3000 and older) | diffusion_models | ~18 GB files; pick based on GPU generation. |
| GGUF Model | Q4 (e.g., Q4_K_S) | unet | Smaller footprint; links are in the workflow notes. |
| VAE | FP32, BF16, or Wan 2.1 VAE | vae | FP32 is large; the older Wan 2.1 VAE is a fallback; BF16 also works. |
| CLIP Vision | Matching release | clip_vision | Link included in the workflow notes. |
| Text Encoder | Smaller FP8 variant | text_encoders | Use the smaller FP8 file to reduce memory usage. |
| Loras (2) | Provided in notes | loras | Links included in the workflow notes. |
| Triton | triton-windows 3.4 for Torch 2.8 | python_embeded (pip install) | Uninstall older Triton; install <3.5 for Torch 2.8. |
| Sage Attention | Wheel matching Python (CP tag) and PyTorch | python_embeded (pip install) | Confirm Torch/Python versions first, then install the wheel. |
| VC++ Runtime | Per repo step | System | Required for Triton/Sage builds. |
| Python ZIP (3.13) | As instructed | python_embeded | Copy contents into the embedded Python folder if required by the guide. |

Folder names may vary slightly by ComfyUI build. Use the standard ComfyUI/models subfolders consistent with your setup.
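To double-check file placement against the table, a short script can list what each standard models subfolder contains. This is a hypothetical helper. The folder names are the standard ComfyUI/models subfolders, and the default root path is an assumption, so pass your own models directory as the first argument.

```python
import sys
from pathlib import Path

# Standard ComfyUI/models subfolders from the table, with the file types
# this workflow uses in each (assumption: adjust for your build).
EXPECTED = {
    "diffusion_models": (".safetensors",),
    "unet": (".gguf",),
    "vae": (".safetensors",),
    "clip_vision": (".safetensors",),
    "text_encoders": (".safetensors",),
    "loras": (".safetensors",),
}


def inventory(models_dir: Path) -> dict[str, list[str]]:
    """Map each expected subfolder to the matching files it contains."""
    found: dict[str, list[str]] = {}
    for folder, suffixes in EXPECTED.items():
        path = models_dir / folder
        files: list[str] = []
        if path.is_dir():
            files = sorted(p.name for p in path.iterdir() if p.is_file() and p.suffix.lower() in suffixes)
        found[folder] = files
    return found


if __name__ == "__main__":
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("ComfyUI") / "models"
    for folder, files in inventory(root).items():
        print(f"{folder}: {', '.join(files) if files else '(empty or missing)'}")
```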

Key Features Used in the Workflow

- Character replacement via mask-guided segmentation.

- Face identification for lip movement and expression matching.

- Manual pointer controls to refine mask (Shift + L/R click, clear, re-run preview).

- Frame windowing for long video generation (e.g., 77–85 frames per window).

- Attention backends: SDPA or Sage Attention.

- Performance tuning:

  - Resolution scaling for speed vs. quality.

  - “Block swap” toggle and “blocks to swap” count for VRAM-constrained systems.

  - “Block mask” size for precise localization.

  - Skipping frames to keep a single window for stable results.

How to Use: Step-by-Step

- Install ComfyUI Portable

  - Extract and run the portable build.

  - Install ComfyUI Manager.

  - Run the update batch file.

- Load the Wan 2.2 Animate Workflow

  - Open ComfyUI.

  - Copy the workflow from the Wan video repository and paste it into ComfyUI.

  - If nodes are missing, install them from ComfyUI Manager's missing-node list.

  - Restart ComfyUI and confirm all missing-node highlights are gone.

- Prepare Models and Files

  - Place FP8 diffusion models in the diffusion models folder (for RTX 4000 and newer).

  - For RTX 3000 and older GPUs, place the E5 models in the same folder instead.

  - Place GGUF files (e.g., Q4 or Q4_K_S) in the U-Net folder.

  - Add the VAE (FP32 or Wan 2.1 VAE) to the VAE folder.

  - Add CLIP Vision, the Text Encoder (smaller FP8 variant), and the required Loras to their folders.

  - The workflow notes include all relevant links.

- Configure the Workflow

  - Select your primary model (FP8, E5, or GGUF).

  - Set the VAE file (try FP32; fall back to Wan 2.1 if needed).

  - Set CLIP Vision and Text Encoder (use the smaller FP8 encoder).

  - Keep “block swap” enabled only when VRAM is tight; a higher swap count slows generation.

  - Adjust “block mask” (e.g., 32) for tighter mask boxes if needed.

- Prepare Inputs

  - Upload the character image to be swapped in.

  - Upload the source video (e.g., 109 frames).

  - Bypass generation nodes to preview the mask first.

- Calibrate the Mask

  - Add green/red pointers to refine inclusion/exclusion.

  - Shift + Left/Right click to add points.

  - Clear pointers if necessary.

  - Run the preview until the mask matches the subject.

- First Generation Run

  - Turn on the generation nodes and queue the run.

  - If “Sage Attention” is missing, switch to SDPA temporarily.

  - If you see “Cannot find Triton,” follow the Triton install steps below.

- Install Triton (if missing)

  - Install the VC++ redistributable from the repo.

  - In the embedded Python folder:

    - Uninstall old Triton:

      .\python.exe -m pip uninstall -y triton

    - Check the Torch version:

      .\python.exe -m pip list | findstr torch

    - Install the Triton build that matches Torch (for Torch 2.8, use 3.4; keep <3.5):

      .\python.exe -m pip install -U "triton-windows<3.5"

  - Restart ComfyUI.

- Performance Tuning

  - Lower resolution if steps are too slow; you can upscale later.

  - Expect each window to process a set number of frames (e.g., 77–85).

  - Avoid odd audio/motion by aligning windows with your total frame count.

  - If the mask shifts after changing resolution, adjust “block mask” and re-check pointers.

- Control Window Size and Skipped Frames

  - Wan video runs well with an ~80-frame window.

  - For a 109-frame input:

    - Skip the first 28 frames and set the window size to 81 to keep one window.

    - This avoids a second window and extra trailing frames.

  - Re-queue and render.

- Install Sage Attention (for speed)

  - Confirm your Python/Torch versions:

    .\python.exe -c "import torch, sys; print('torch', torch.__version__, 'py', sys.version)"

  - Download the Sage Attention wheel matching your CP tag and Torch version.

  - Place the wheel in the embedded Python folder.

  - Install it locally:

    .\python.exe -m pip install .\SageAttention-<matching>-cp39-cp39-win_amd64.whl

  - If instructed, copy the Python 3.13 ZIP contents into the embedded Python folder.

  - Restart ComfyUI, select Sage Attention, and test generation.

- Final Output

  - If you want a single assembled video, connect the image directly to the final output path in the workflow.

  - For longer videos, repeat with multiple windows (e.g., 85 frames each) while monitoring quality.

Practical Notes and Observations

- Step time at ~800 px on a 16 GB RTX 4060 Ti was about 61 seconds with SDPA; lowering the resolution reduced it to ~18 seconds.

- First run with Sage Attention showed ~14 seconds per step; total time may improve on subsequent runs.

- Lighting on the swapped character may remain stable with smaller models; larger models can change lighting more noticeably.

- If a run hangs, you may need to force-terminate and restart ComfyUI.

- Links to Triton and Sage Attention are included in the workflow notes.

- The workflow appears in the browser section under “Wan video wrapper,” and installed node packages are listed there as well.

FAQs

Q: Where do I put FP8, E5, and GGUF models?

- FP8/E5 diffusion models go in the diffusion models folder.

- GGUF models go in the U-Net folder.

- VAE, CLIP Vision, Text Encoder, and Loras go in their respective folders.

- All links are in the workflow notes.

Q: Which model should I pick for my GPU?

- RTX 4000 series or newer: FP8.

- RTX 3000 series and older: E5.

- GGUF variants (e.g., Q4 or Q4_K_S) are smaller and go in the U-Net folder.

Q: I get “Cannot find Triton.” What do I do?

- Install the VC++ runtime (per repo).

- Uninstall any existing Triton and install the Windows build that matches your Torch (e.g., “triton-windows<3.5,” which gives 3.4 for Torch 2.8).

- Restart ComfyUI.

Q: Sage Attention is missing. How do I enable it?

- Download the Sage Attention wheel matching your Python (CP tag) and Torch version.

- Install it locally from the Python embedded folder.

- Restart ComfyUI and select Sage Attention in the workflow.

Q: My mask shifted after changing resolution. How do I fix it?

- Adjust the “block mask” value (e.g., 32) for smaller, more precise boxes.

- Re-check and add pointers (Shift + clicks), then re-run the preview.

Q: Why are there extra frames or silent sections?

- Window size may not align with your total frame count.

- Keep one window around ~80 frames or divide frames evenly between windows.

- You can skip frames to fit a single window (e.g., skip 28 to run 81 frames in one window).

Q: Is there a quality loss with windows >80 frames?

- Yes, pushing beyond ~80 can impact quality. Keep windows around 77–85.

Q: My steps are slow. How can I speed up?

- Lower resolution and upscale later.

- Use Sage Attention if available.

- Reduce “blocks to swap” or disable block swap on larger VRAM GPUs.

Q: The segmentation file didn’t download. Is that an issue?

- If the required segmentation model is already in the models folder, the workflow won't download it again. That's fine.

Q: How do I get a single combined video output?

- Connect the image directly to the final video output in the workflow.

Conclusion

I set up ComfyUI Portable, installed the Wan 2.2 Animate workflow, and resolved missing nodes. I placed FP8/E5 diffusion models and GGUF U-Net models in the correct folders, configured VAE, CLIP Vision, Text Encoder, and Loras, and tuned “block swap” and “block mask” based on performance and precision needs. I prepared the character image and source video, refined the mask with pointers, and tested with SDPA first.

When Triton errors appeared, I installed the correct Triton version for my Torch, then moved to Sage Attention for faster steps. I managed frame windows by keeping around 80 frames per window, skipping frames to avoid extra windows, and aligning settings to maintain quality. I tested larger variants and confirmed longer outputs work with multiple windows.
